The Role of Motion-and-Visual Perception in Robot Place Learning and Navigation

Ph.D. Thesis

Abstract

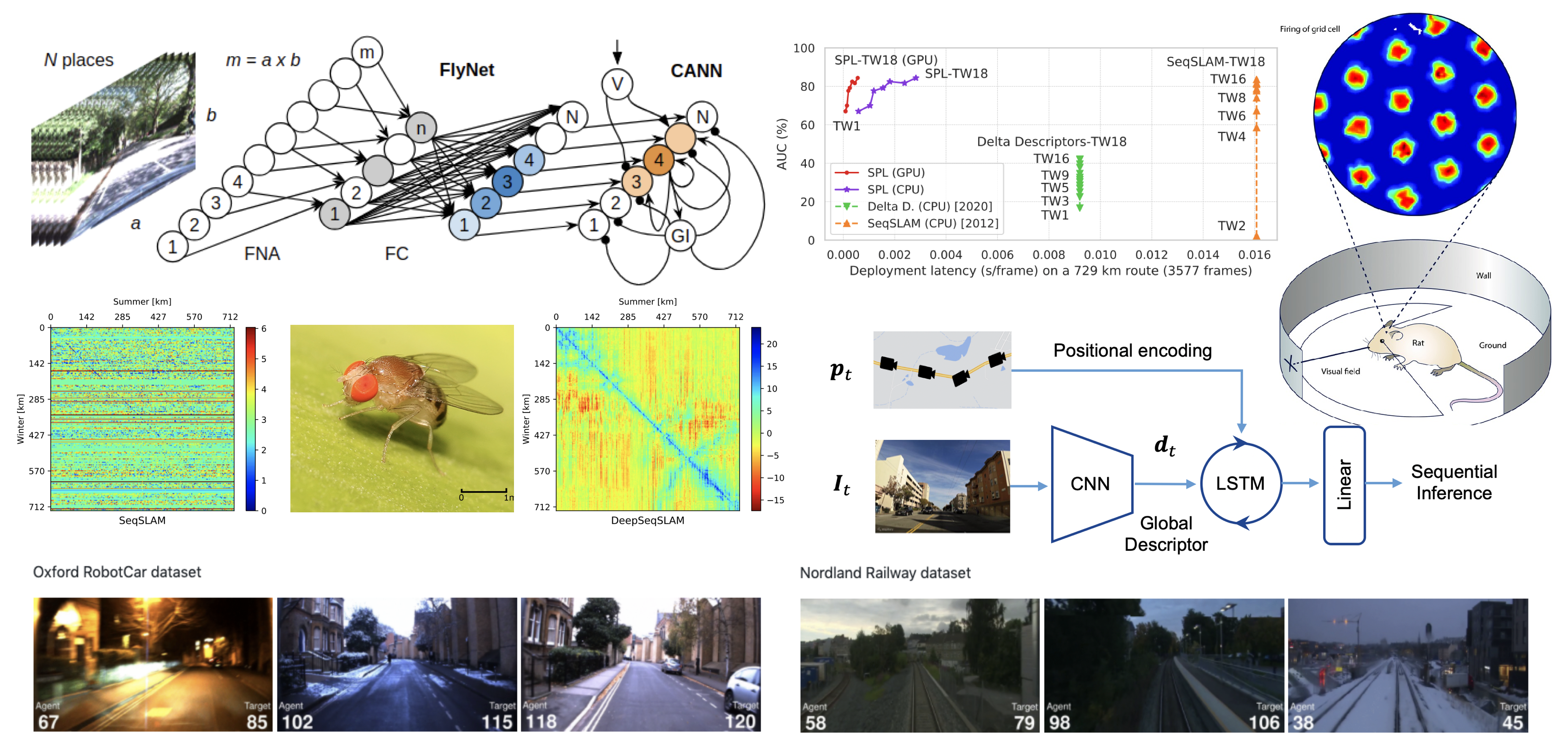

Autonomous robot navigation primarily relies on motion and visual perception (MVP) with feedback control from the environment. Vision-based robot navigation algorithms have largely been constrained to indoor, small outdoor, or simulation settings, and their generalization under extreme appearance changes has not yet been extensively investigated. Conversely, traditional motion-based robot place recognition pipelines perform surprisingly well under severe variations in weather, climate or illumination, yet they have significant disadvantages for navigation applications, such as high latency and high storage requirements. In this thesis, I study the role of joint MVP-based end-to-end learning in both place recognition and navigation within modern reinforcement learning and deep learning frameworks. For robot place recognition, I propose two types of high-performance neural architectures, FlyNet+CANN and DeepSeqSLAM, along with novel sequence processing methods for learning motion-driven representations from MVP data. Like classical two-stage heuristic-based pipelines, FlyNet+CANN requires both training and testing data for deployment, but it performs sequential place recognition using an entirely neural implementation; its CANN component, however, requires careful fine-tuning prior to deployment. In contrast, DeepSeqSLAM can be trained end-to-end to efficiently perform sequential place learning (an idea formally introduced here) from a single training traversal of an environment while generalizing robustly under severely changing conditions, although its accuracy relies on precise motion estimation. For robot navigation, I introduce the interactive CityLearn simulation environment for all-weather, city-scale training and testing of navigation agents, based on simple move forward/backward commands, along with new sample-efficient neural architectures.
CityLearn features over ten driving datasets from 60+ cities around the world, while allowing the use of any other dataset, such as those from drones or underwater robots. Together, the proposed methods set new state-of-the-art performance standards with higher throughput and lower latency, representing a significant step towards the deployment of new robot learning-based SLAM and autonomous navigation systems in the real world.

Thesis: [PDF] [ePrint]

PhD Thesis Defense

Bibtex

@phdthesis{MarvinChancanPhDThesis,
  author = {Marvin Aldo Chancan Leon},
  title = {The role of motion-and-visual perception in robot place learning and navigation},
  school = {Queensland University of Technology},
  year = {2022},
  doi = {10.5204/thesis.eprints.229769},
  keywords = {mobile robots, navigation, place recognition, motion estimation, deep learning, reinforcement learning, artificial neural networks, convolutional neural networks, recurrent neural networks, continuous attractor neural networks},
  url = {https://eprints.qut.edu.au/229769/}
}